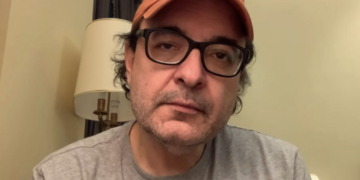

The AI industry is growing rapidly and is already having a significant impact on many sectors of society. From writing code to designing games, AI systems like ChatGPT have been widely adopted and have proven useful in many applications. Along with these benefits, however, AI also brings potential harms that need to be addressed. In his book, “Containing Big Tech: How to Protect Our Civil Rights, Economy, and Democracy,” author Tom Kemp argues that regulation is necessary to ensure the responsible and ethical use of AI.

Dr. Timnit Gebru, the founder of the Distributed Artificial Intelligence Research Institute (DAIR), emphasizes the need for principles, standards, governing bodies, and accountability in confronting AI bias. She suggests establishing an equivalent of the Food and Drug Administration (FDA) to regulate AI. Under this approach, AI impact assessments would be submitted to the Federal Trade Commission (FTC), much as drug applications are submitted to the FDA. These assessments would focus on AI systems in high-impact areas such as housing, employment, and credit, to address issues like digital redlining. This kind of regulation promotes transparency and accountability for the benefit of consumers.

In 2022, the Biden Administration’s Office of Science and Technology Policy (OSTP) published a “Blueprint for an AI Bill of Rights,” which includes the right of individuals to know when an automated system is being used and to understand how it affects them. This idea could be incorporated into the FTC’s rulemaking responsibilities if proposed legislation such as the Algorithmic Accountability Act (AAA) or the American Data Privacy and Protection Act (ADPPA) is passed. The goal is to ensure that AI systems are not complete black boxes to consumers, and that consumers have the right to object to their use in certain contexts, much as individuals have rights over the collection and processing of their personal data.

To enhance accountability and transparency, Kemp suggests introducing AI certifications. Just as the finance industry has certified public accountants (CPAs) and certified financial audits, AI professionals should have equivalent certifications. Codes of conduct and industry standards for the use of AI should also be established. The International Organization for Standardization (ISO) is already developing AI risk management standards, and the National Institute of Standards and Technology (NIST) has released its initial AI Risk Management Framework. These efforts aim to ensure that AI is used in line with established guidelines and best practices.

Diversity and inclusivity in AI design teams are also essential. Olga Russakovsky, an assistant professor at Princeton University, points out that diversifying the pool of people who build AI systems will lead to less biased AI.

On the antitrust front, regulators need to focus on the expanding AI sector. Acquisitions of AI companies by Big Tech firms should be closely scrutinized to ensure fair competition. The government could also consider mandating open intellectual property for AI, similar to the 1956 federal consent decree that required AT&T’s Bell System to license its patents. This would keep AI technology from becoming concentrated in the hands of a few companies and would foster innovation.

As AI continues to advance, it is crucial to prepare for its impact on the workforce. Education and training should help people adapt to the changing job landscape. This approach must be strategic, however, because not everyone can be retrained as a software developer, and AI systems are increasingly automating software development itself. Understanding which skills will matter in an AI-driven world is therefore critical. Economist Joseph E. Stiglitz warns that the profound changes brought about by AI require careful management to prevent polarization and strengthen democracy. The goal should be for AI’s impact to be a net positive for society, and that starts with responsible practices from Big Tech companies.

It is essential to address the risks and threats associated with AI. The collection and processing of sensitive data by Big Tech companies raises significant concerns, and how AI is used to analyze that data and make automated decisions matters just as much. To mitigate these risks, regulatory measures must be in place to ensure responsible and ethical AI practices. Just as digital surveillance needs to be regulated, preventing Big Tech’s misuse of AI is crucial to avoid opening Pandora’s box.

In conclusion, the rapid growth of the AI industry calls for regulation that balances its benefits against its potential harms. Establishing governing bodies, introducing regulation and certification processes, promoting diversity in AI design teams, scrutinizing antitrust issues, and preparing for AI’s impact on the workforce are all crucial steps in containing the power of AI. By implementing responsible practices and ensuring transparency, society can reap the benefits of AI while protecting civil rights, the economy, and democracy.